Data modelling and schema design in Adobe Experience Platform

When implementing a Customer Data Platform with Adobe Experience Platform (AEP), the most vital part of the design process is data modeling and building your schemas on the platform. A good schema design will make your setup shine. A bad schema design will give you headaches, cost you a lot of time, and will surely lead to painful restructuring.

Adobe provides a very helpful guideline for designing a schema. Here are the insights I gained along the way when working with the Adobe Experience Platform.

Schema design in Adobe Experience Platform

As in any database, a schema is the structure of the data you send to AEP. But it doesn’t just define how your data is stored. It also determines how your field structure is shown to a marketer in the segmentation UI, and it has a huge influence on how fast your segment evaluation runs. Some design patterns lead to segments being evaluated in batch, while others allow real-time evaluation. To understand schema design, it is important to understand a few concepts first.

A schema in AEP is a JSON structure that defines the data fields and data types in a hierarchical manner.
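
To make the idea of a hierarchical field structure more concrete, here is a minimal sketch in Python. The dictionary is a simplified, JSON-Schema-style illustration and not an exact XDM schema; all field names (`person`, `first_name`, `birth_year`, `newsletter_consent`) are hypothetical. The helper flattens the tree into dot-separated paths, which is roughly how nested fields are addressed.

```python
from typing import Any, Dict, Iterator, Tuple

# Simplified, hypothetical schema: nested objects with typed leaf fields.
schema_sketch: Dict[str, Any] = {
    "type": "object",
    "properties": {
        "person": {
            "type": "object",
            "properties": {
                "first_name": {"type": "string"},
                "birth_year": {"type": "integer"},
            },
        },
        "newsletter_consent": {"type": "boolean"},
    },
}


def iter_fields(node: Dict[str, Any], prefix: str = "") -> Iterator[Tuple[str, str]]:
    """Yield (dot.path, data type) for every leaf field in the tree."""
    for name, child in node.get("properties", {}).items():
        path = f"{prefix}.{name}" if prefix else name
        if child.get("type") == "object":
            # Descend into nested objects and extend the path.
            yield from iter_fields(child, path)
        else:
            yield path, child["type"]


fields = dict(iter_fields(schema_sketch))
for path, data_type in fields.items():
    print(f"{path}: {data_type}")
```

A real schema carries more metadata than this, but the principle of objects containing typed fields stays the same.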

Schema Classes

When building a schema, the first question you have to ask yourself is what type of data you are dealing with. Then you can decide which class to use to build the schema. There are two main prebuilt classes in AEP that you will use most of the time.

Figure 1: Options shown in the dropdown menu when creating a new Schema.

Both of these schema classes come with an existing field structure and prebuilt functionality.

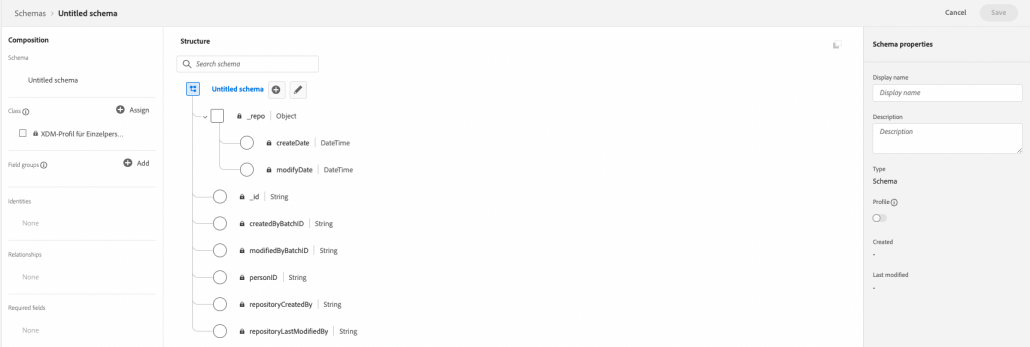

XDM Individual Profile is used for record data. Profile data is mutable and is attached to a person, like a first name, email address, or consent status. These are stored as a singular record and always reflect the current status. Once they are changed, it is not possible to look up what these values were in the past.

Figure 2: XDM Individual Profile Schema

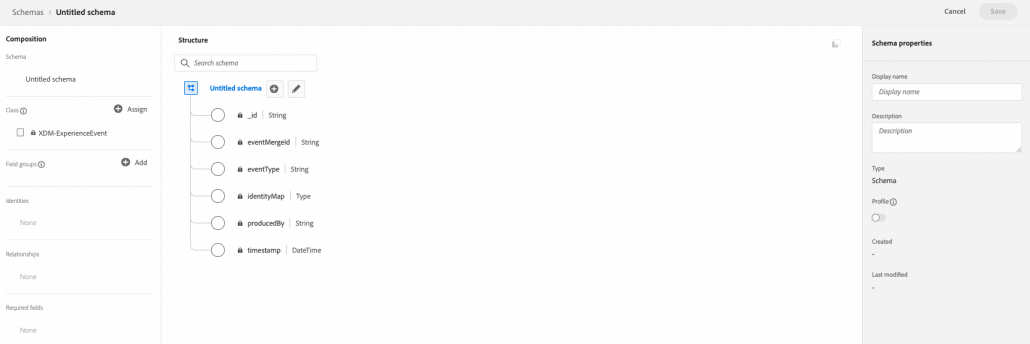

XDM ExperienceEvent is used for event data. Events are occurrences that happen and then never change; they are immutable. For example, a user logged into your platform, or a user created an account. Each of these events needs a timestamp of when it happened. A universally unique _id per event is also very important. Note that this ID must be unique across all datasets in the platform. You could use a UUID or a hashed combination of the dataset name and a unique ID from your imports or streams.
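
The two ID strategies mentioned above could be sketched like this in Python. This is only an illustration: the function names are my own, the choice of SHA-256 and the `:` separator are assumptions, and the dataset name and source ID in the usage lines are made up.

```python
import hashlib
import uuid


def random_event_id() -> str:
    """Option 1: a random UUID (version 4) for each event."""
    return str(uuid.uuid4())


def deterministic_event_id(dataset_name: str, source_id: str) -> str:
    """Option 2: hash the dataset name together with the source's own unique ID.

    The same input always yields the same ID, and the dataset name keeps
    IDs from different datasets apart even if their source IDs overlap.
    """
    raw = f"{dataset_name}:{source_id}".encode("utf-8")
    return hashlib.sha256(raw).hexdigest()


# Hypothetical dataset name and source ID, for illustration only.
print(random_event_id())
print(deterministic_event_id("login_productDB", "42"))
```

The deterministic variant has the advantage that re-running an import produces the same ID for the same source row, which makes duplicates easier to spot.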

Figure 3: XDM ExperienceEvent Schema

It is very important to know that AEP is an additive platform, so even though deleting datasets and batches is possible, you will not be able to modify any of the events. And even when deleting a dataset, the underlying ID graph can only be deleted via a support request as of now.

Be aware that when deleting data from the ID graph via support, you need to tell them the related dataset ID, which is kind of hard once the dataset is deleted. So I would recommend noting it down before deletion.

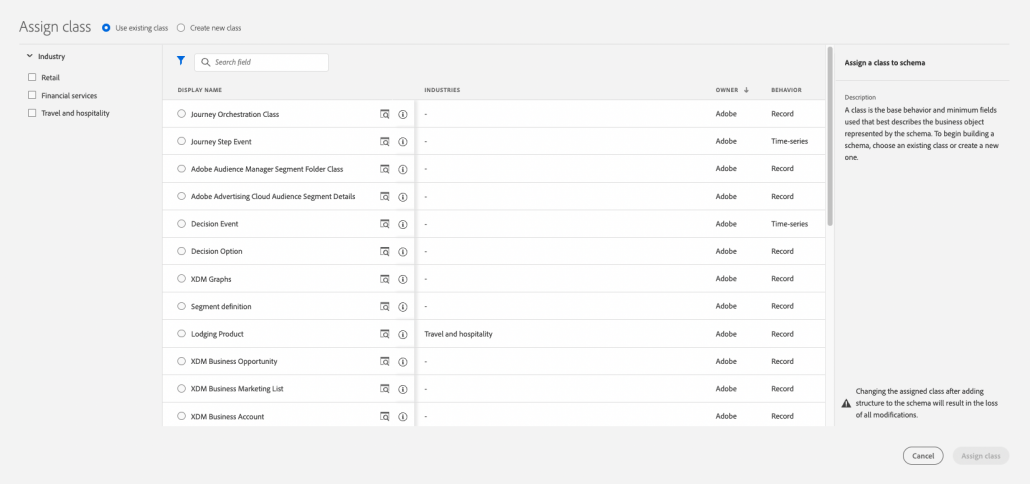

Other XDM Classes: There are also classes created by Adobe that are primarily used for lookup purposes. For example, you can use the product class to attach product information to your events. This can be very useful for attaching mutable data to events. But keep in mind that when you use these lookup datasets in segmentation, the segment is evaluated in batch, so currently just once daily.

Figure 4: Other XDM Classes as listed in the Adobe Experience Platform’s Interface

Keep in mind that it is a very good idea to stick to the standard classes in AEP unless you have a very good reason not to.

Field Groups (Formerly known as Mixins)

Field Groups are the building blocks for your additional schema fields. These make fields reusable across multiple schemas.

When adding field groups and adding fields, I follow the following guidelines:

Check if there is already a preexisting field group that fits my purpose

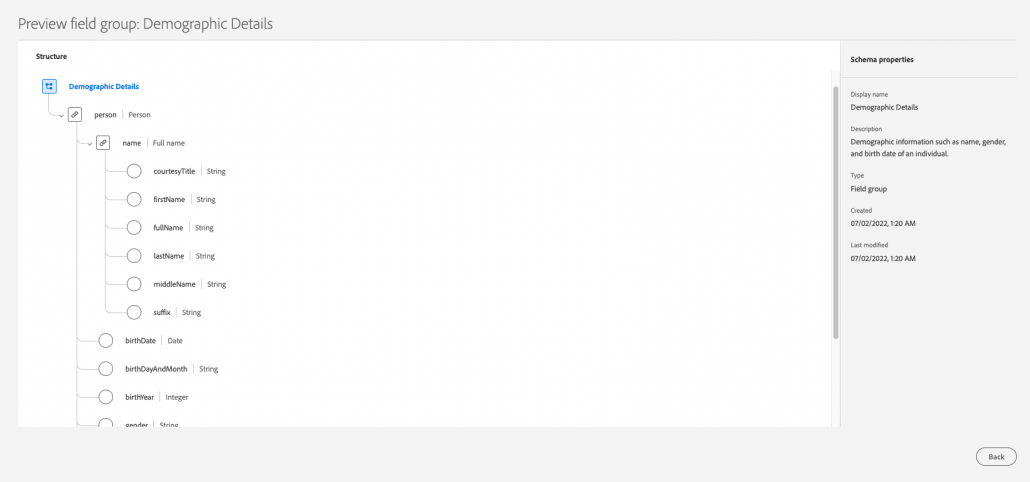

Adobe has provided a big set of existing field groups. Some (like demographic details) have proven very helpful to me.

Figure 5: The demographic data field group

Some I would highly recommend using. For example, the AEP Web SDK ExperienceEvent field group will be your starting point when integrating the Adobe Web SDK.

Generally, even though they are sometimes a bit over-engineered, these can help you understand reusability and the structure that Adobe intended when building schemas.

Reuse re-occurring fields via field groups

If you don’t find anything that fits your purpose, build your own field group with reusability in mind. So if any of the other schemas share the same fields, a field group is your friend.

Use a separate field group for all the fields that are just specific to that schema

Only if a field is specific to just this schema, add it to a field group specific to this Schema.

This helps to build a reusable but still flexible schema architecture.

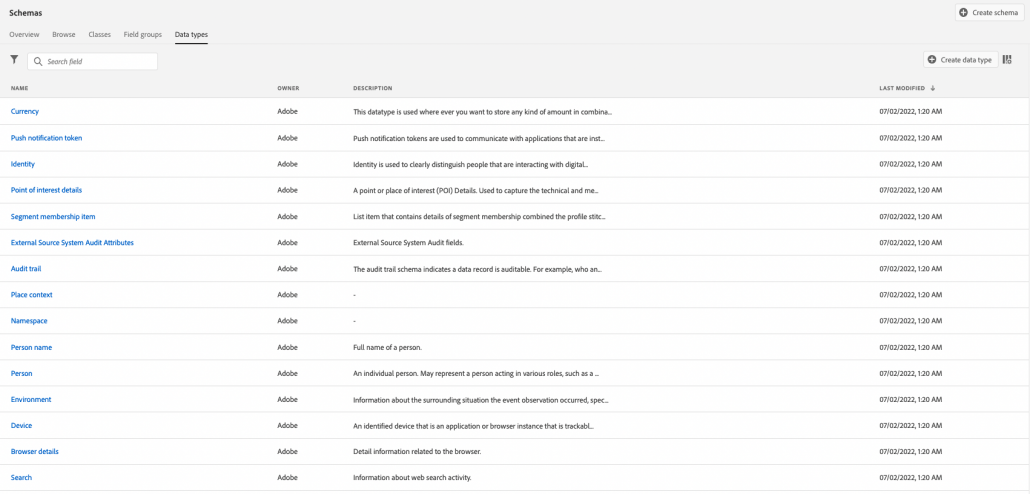

Data Types

Each field is assigned a data type. Apart from the standard data types like string, integer, boolean, and double, there are data types prebuilt by Adobe, for example for an email address field. I would always recommend taking a look here first before building your own data type and reinventing the wheel.

You can use objects to build a nested structure, and you can use arrays to add multiple values to one event field. But keep in mind that currently, when batch importing CSV or Parquet files, it is not possible to fill multiple values into an array. Also, there is no push function available to add a value; you have to completely replace the array’s content.

Figure 5: Existing data types as presented in the user interface

Custom Data Types can help you to build your own reusable JSON structure. You can use objects to structure your data in a nested way and share this structure across Field Groups. Data Types allow you to reuse data structures beyond the first level, so they are a bit more flexible than a field group.

7 additional things that helped me along the way:

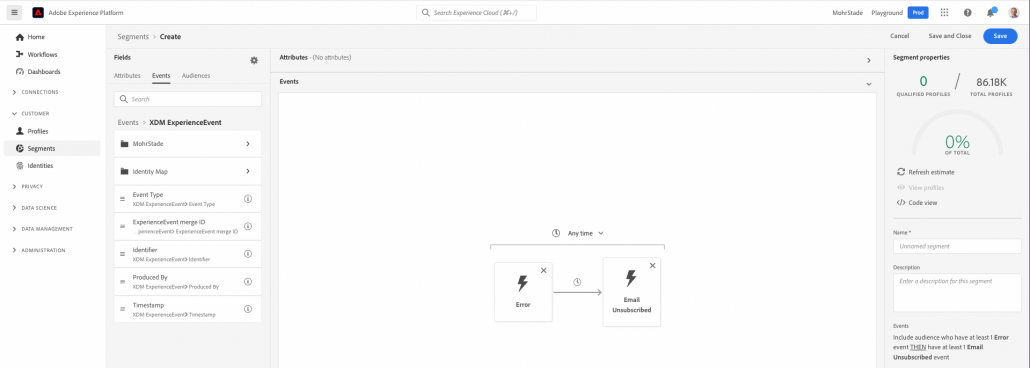

1. Check out your results in the Segment builder with some data

I always work with a playground sandbox alongside a dev sandbox. It is my wild west area to experiment and build schemas.

There I add sample data and then rebuild the schema and dataset whenever necessary.

Then I build some segments to experience what I built firsthand. It makes sense to involve stakeholders as early as possible in this part of the process, so they can give you valuable feedback. Things that seem self-evident and logical to you might not be to everyone.

Figure 6: Segment Builder User Interface

I would always check out the resulting Union Schema structure once you have activated data for profile use, so you can have a full view of your schema structure.

2. Agree on a naming convention and make use of description fields

Using naming conventions and proper naming can help to keep things clear. I prefer snake_case, but you can use whatever you like here. I also use capitalized nouns for naming my Profile and Lookup Classes (e.g. CRM_Profile, Web_SDK_Profile).

For events, I use a verb in all lowercase (e.g. web_sdk_event, login).

When naming a dataset, I use the name of the underlying Schema and add the source of the data (e.g. CRM_Profile_msDynamics, login_productDB).

I think what is important is that your team agrees on a useful convention that will be clear without much explanation.
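
If you want to check names automatically, a convention like mine could be expressed as a couple of regular expressions. This is only a sketch of my own convention as described above; the patterns and function names are assumptions, and nothing here is enforced by AEP itself.

```python
import re

# Capitalized words joined by underscores, e.g. CRM_Profile, Web_SDK_Profile.
PROFILE_SCHEMA = re.compile(r"^[A-Z][A-Za-z0-9]*(_[A-Z][A-Za-z0-9]*)*$")
# All-lowercase snake_case, e.g. login, web_sdk_event.
EVENT_SCHEMA = re.compile(r"^[a-z][a-z0-9]*(_[a-z0-9]+)*$")


def is_valid_profile_name(name: str) -> bool:
    return PROFILE_SCHEMA.match(name) is not None


def is_valid_event_name(name: str) -> bool:
    return EVENT_SCHEMA.match(name) is not None


def dataset_name(schema_name: str, source: str) -> str:
    """Dataset name = schema name plus the source of the data."""
    return f"{schema_name}_{source}"


print(is_valid_profile_name("CRM_Profile"))
print(is_valid_event_name("web_sdk_event"))
print(dataset_name("CRM_Profile", "msDynamics"))
```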

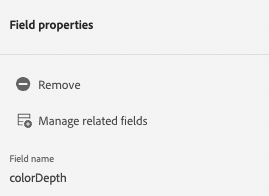

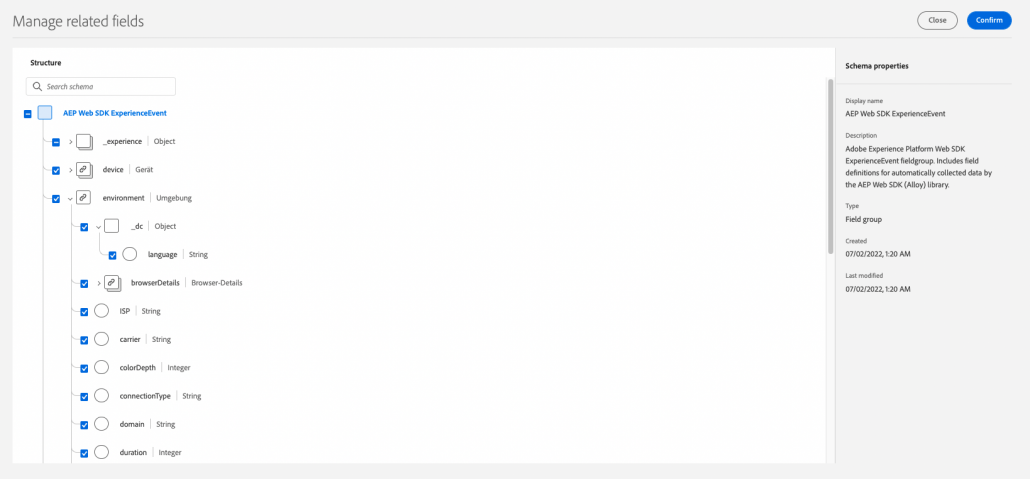

3. Simplify via managing related fields if needed

The prebuilt structure can be a bit overblown to get started with. So keep in mind that you can still hide fields within a schema. This feature can also be used to hide fields that should no longer be used, as otherwise there is no way of removing fields once data is flowing. You can also use it to share fields between schemas where there are only minor differences. Most of the time, though, it makes sense to use field groups in that case.

Figure 7: Click Manage related fields in the fields’ property pane to hide some of the fields in the field group for this specific schema

Figure 8: Related fields can be hidden by unchecking the checkboxes

4. Visualize Schema in an ERD tool

Once you have a basic idea of your schema design and a grip on how XDM works, it often makes sense to go back to the drawing board and visualize your full schema in an ERD tool. This can help you get a better idea of your data, and it can be useful for documentation purposes as well.

I have used vuerd for VS Code, which is open source and free.

5. Keep it Simple, Stupid!

Generally, my tip would be to keep it simple, as Adobe can feel a bit over-engineered and not everybody needs everything that comes with the platform.

Adobe Schemas can sometimes be a bit overwhelming. So if this structure doesn’t fit, use your own, keeping possible future extensions in mind. You can use objects to build a nested structure, but I would only resort to that if there are many subfields and it makes sense to have a tree structure.

6. Make use of the API

Even though the UI is my go-to place for the first few drafts when building schemas, AEP is an API-first platform, which can be felt sometimes, for better and for worse. Refining schemas via the API can speed up the process and can uncover some additional functionality not available in the UI.

For example, reusing Field Groups across schema classes is currently only possible via the API, but it can save you some work. By changing the meta:intendedToExtend property, you can make a Field Group available across schema classes.

    "meta:intendedToExtend": [
      "https://ns.adobe.com/xdm/context/profile",
      "https://ns.adobe.com/xdm/context/experienceevent"
    ]

For this purpose, you can use the Postman Collection provided by Adobe.

Or even better, Julien Piccini has built an immensely helpful Python wrapper for the API. I used this to download my field groups as files, which I can then modify before uploading my changes. The most useful feature has been the option to copy field groups to a new environment.
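
If you work with a downloaded field group definition as a local file, the meta:intendedToExtend change itself is a small edit on the JSON. Here is a minimal sketch in plain Python that touches only the local definition. The field group content and the function name are hypothetical, and how you download and upload the definition (Postman or the Python wrapper) is deliberately left out.

```python
import json
from typing import Any, Dict, List

PROFILE_CLASS = "https://ns.adobe.com/xdm/context/profile"
EVENT_CLASS = "https://ns.adobe.com/xdm/context/experienceevent"


def extend_to_classes(field_group: Dict[str, Any], class_ids: List[str]) -> Dict[str, Any]:
    """Return a copy of the definition that lists all given classes, without duplicates."""
    updated = dict(field_group)
    current = list(updated.get("meta:intendedToExtend", []))
    for class_id in class_ids:
        if class_id not in current:
            current.append(class_id)
    updated["meta:intendedToExtend"] = current
    return updated


# Hypothetical field group definition, reduced to the relevant property.
field_group = {
    "title": "Shared_Contact_Details",
    "meta:intendedToExtend": [PROFILE_CLASS],
}

updated = extend_to_classes(field_group, [PROFILE_CLASS, EVENT_CLASS])
print(json.dumps(updated["meta:intendedToExtend"], indent=2))
```

The function returns a copy instead of changing the input, so you can compare the original and the updated definition before uploading anything.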

7. Think about your current data structure and possible future extensions

A schema can be changed at will as long as there are no connected datasets. But as soon as data is flowing, removing fields or changing the data type of an existing field is no longer possible. So think about your data types and structure. Even though sending a value as an integer, for example, is OK for now, things might change, and you might need to send it as a double. In some cases, using objects or field groups makes sense to allow adding subfields later, even if there is currently no need to. So don’t just think about the current data, but also about possible extensions later. No one can predict the future, but at least try to think of what might be your next step when evolving the platform. Evolving your schema is possible, but it can sometimes become very painful and confusing with lots of deprecated fields.
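
Before touching a schema that already has data flowing, it can help to compare the current and the planned field list. The following Python sketch only encodes the two rules from the paragraph above (no removed fields, no changed data types) and treats added fields as safe. It is a simplification of my own, not the platform's actual validation, and the field names are made up.

```python
from typing import Dict, List


def breaking_changes(old: Dict[str, str], new: Dict[str, str]) -> List[str]:
    """Compare two {field path: data type} maps and list non-additive changes."""
    problems = []
    for path, old_type in old.items():
        if path not in new:
            problems.append(f"removed field: {path}")
        elif new[path] != old_type:
            problems.append(f"type change on {path}: {old_type} -> {new[path]}")
    return problems


# Hypothetical current and planned versions of a schema.
current_fields = {"order.total": "integer", "order.currency": "string"}
planned_fields = {"order.total": "double", "order.discount": "double"}

for problem in breaking_changes(current_fields, planned_fields):
    print(problem)
```

In this example, adding order.discount is fine, while switching order.total from integer to double and dropping order.currency are flagged.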